9 Time Series Regression with Correlated Errors

This lecture brings together knowledge about regression, time series trends, and autocorrelation to obtain correct inference in regression problems involving time series. You should become capable of suggesting a model that meets the goal of the data analysis (testing certain relationships) while satisfying the model assumptions (e.g., uncorrelatedness of residuals) and minimizing the risk of spurious results.

Objectives

- Model autocorrelated residuals (in other words, incorporate or account for autocorrelation) so the final residuals satisfy modeling assumptions.

- Apply and interpret Granger causality that is based on time series predictability.

Reading materials

- Chapters 3.8, 5.5, and 5.6 in Shumway and Stoffer (2017)

- Chapter 3 in Kirchgässner and Wolters (2007) on Granger causality

9.1 Introduction

Here we explore a regression model with autocorrelated residuals. We already know how to

- forecast, construct prediction intervals, and test hypotheses about regression coefficients when the residuals satisfy the ordinary least squares (OLS) assumptions, i.e., the residuals are white noise and have a joint normal distribution \(N(0, \sigma^{2})\);

- model serial dependence (i.e., autocorrelation) in stationary univariate time series, such as using ARMA models.

Here we bring these two skills together.

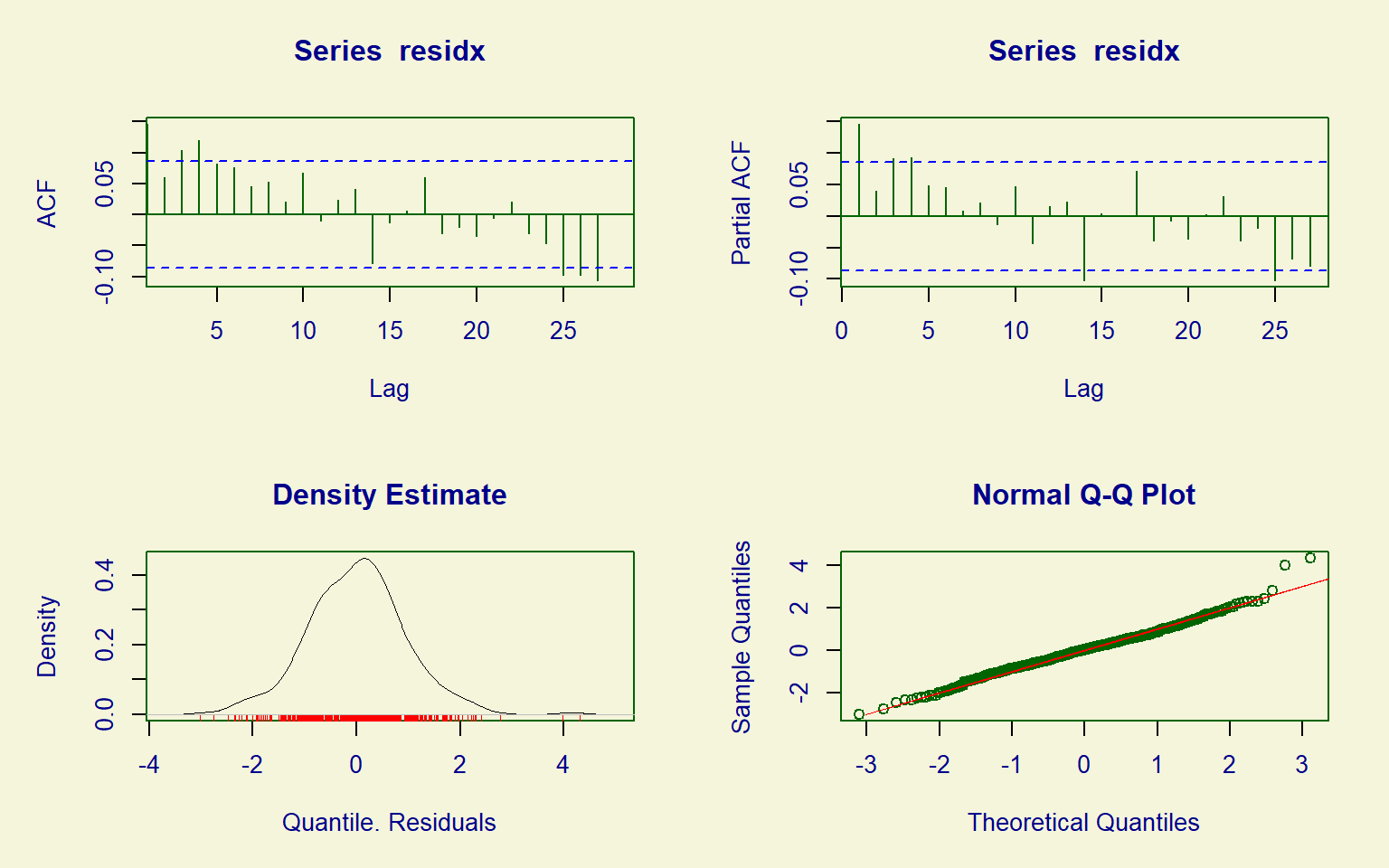

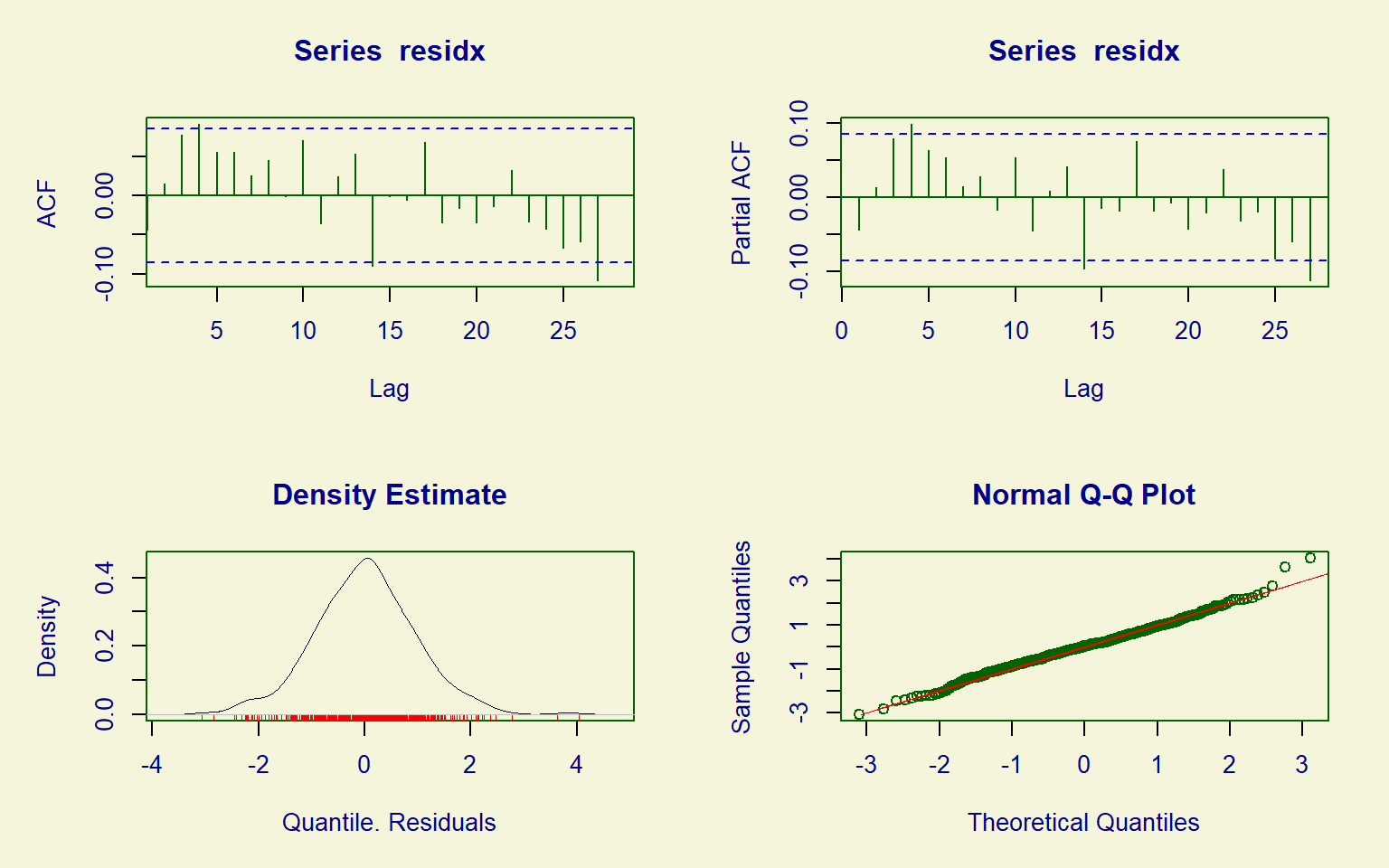

If the residuals do not satisfy the independence assumption, we can sometimes describe them in terms of a specific correlation model involving one or more new parameters and some ‘new residuals.’ These new residuals essentially satisfy the OLS assumptions and can replace the original correlated residuals (which we will sometimes call the ‘old’ residuals) in the model.

When modeling time series, we often want to find so-called leading indicators, i.e., exogenous variables whose lagged values can be used for predicting the response, so we do not need to predict the \(X\)-variables. Hence, our models may include lagged versions of both the response and predictor variables (a general form of such a model was given in Equation 2.2).

9.2 Cross-correlation function

Cross-correlation is a correlation between different time series, typically with a time offset \(k\) (a.k.a. lag): \[ \mathrm{cor}(X_{t+k},Y_t). \tag{9.1}\] (Recall that autocorrelation is also a correlation with a lag present, but for the same time series.) By calculating cross-correlations at each lag \(k = 0, \pm 1, \pm 2, \dots\), we obtain the cross-correlation function (CCF). CCF shows how the correlation coefficient varies with the lag \(k\) and helps us to identify important lags and find leading indicators for predictive models. For example, the relationship between nutrient input and algal blooms in a marine environment is not immediate since the algae need time to reproduce. The correlation between a time series of births and demand for baby formula would be distributed over smaller lags \(l\) \[ \mathrm{cor}(\mathrm{Births}_{t - l}, \mathrm{FormulaDemand}_t) \] than the correlation lags \(L\) between births and school enrollments \[ \mathrm{cor}(\mathrm{Births}_{t - L}, \mathrm{SchoolEnrollment}_t), \] where \(l,L > 0\) and \(l < L\).
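As a minimal sketch of the definition in Equation 9.1, the following simulated example estimates one cross-correlation by hand and compares it with the output of ccf(). The series `x` and `y`, the true lag of 2, and the noise level are illustrative assumptions, not data from this lecture.

```r
# Simulated example: y depends on x from two time steps earlier
set.seed(1)
n <- 300
x <- rnorm(n)
y <- c(rnorm(2), x[1:(n - 2)]) + rnorm(n, sd = 0.5)

# Manual estimate of cor(X_{t+k}, Y_t) at k = -2: pair x[t - 2] with y[t]
k <- -2
r_manual <- cor(x[1:(n - 2)], y[3:n])

# The same quantity from ccf(); plot = FALSE returns the estimates only
cc <- ccf(x, y, lag.max = 5, plot = FALSE)
r_ccf <- cc$acf[cc$lag == k]

round(c(manual = r_manual, ccf = r_ccf), 3)
```

The two numbers differ slightly because ccf() uses the overall means of the series and divides by \(n\) at every lag, whereas cor() uses only the overlapping pairs.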

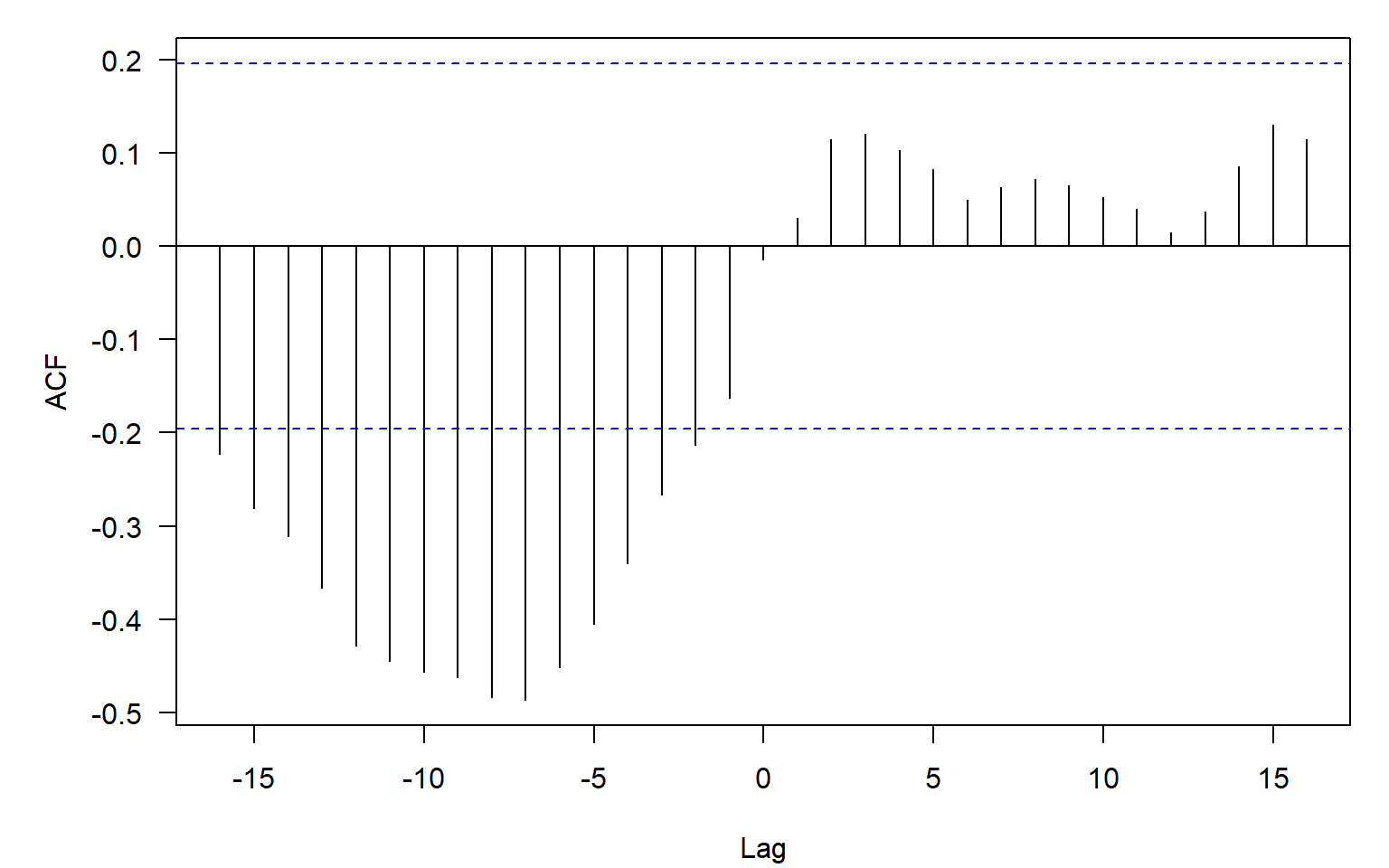

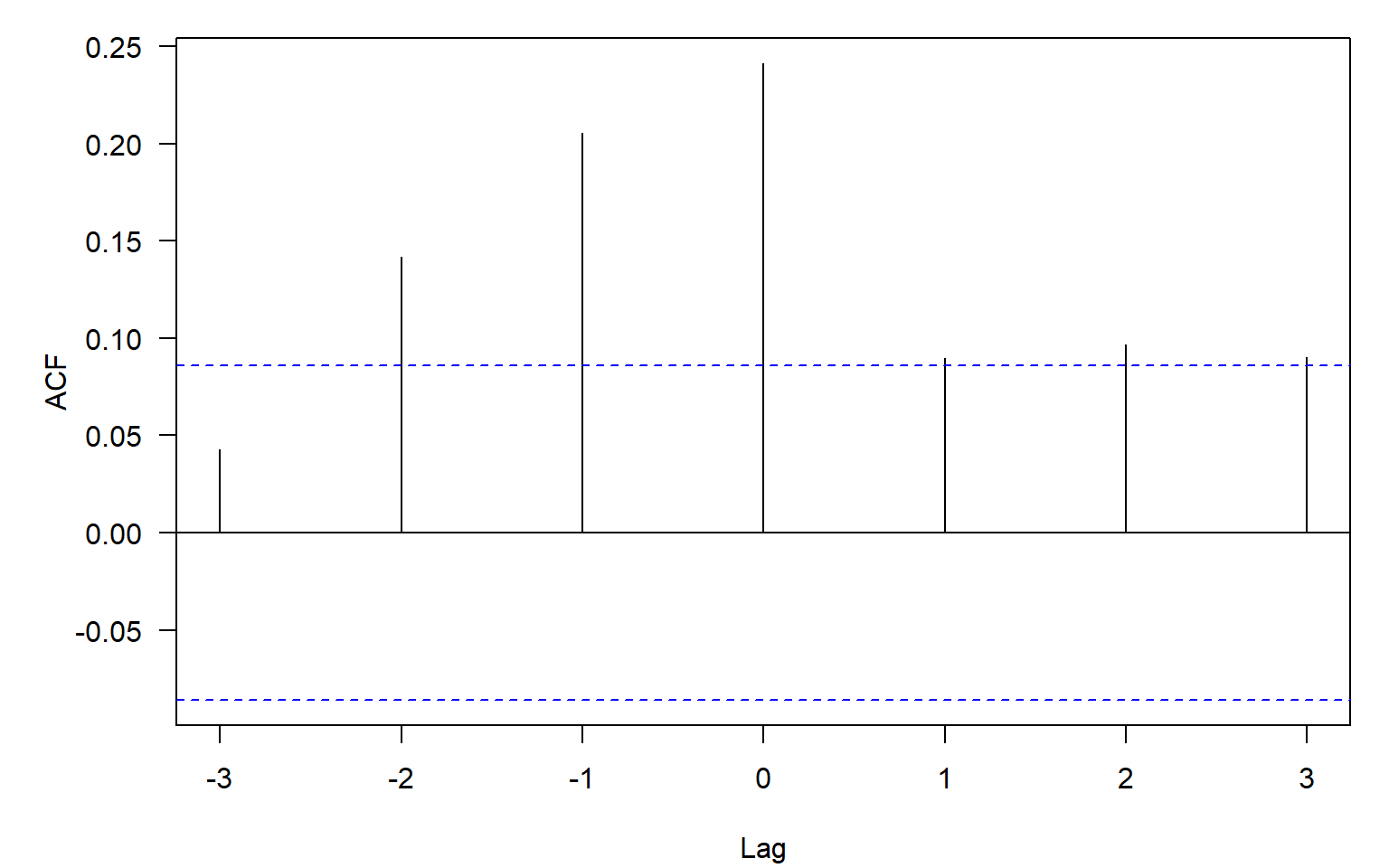

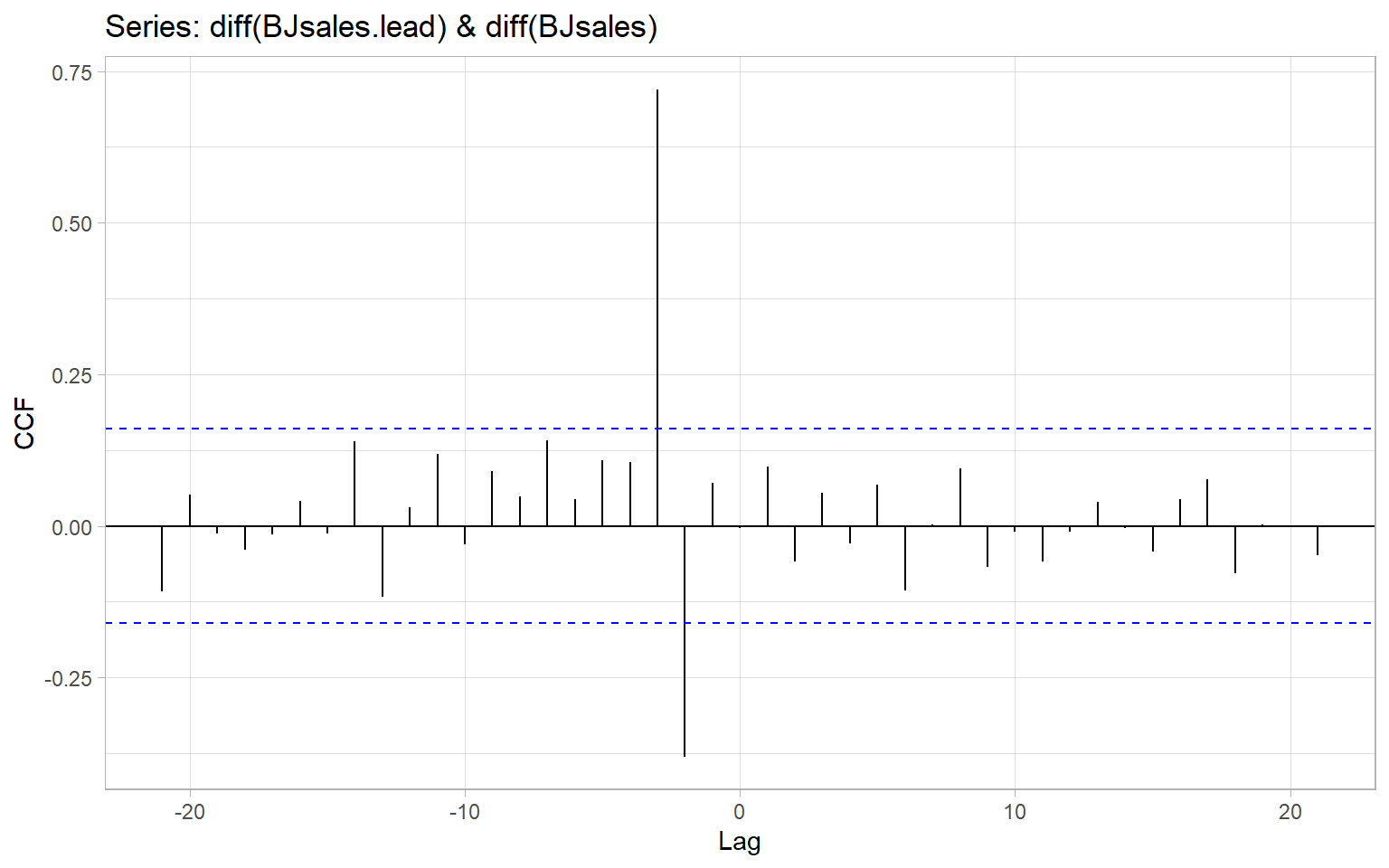

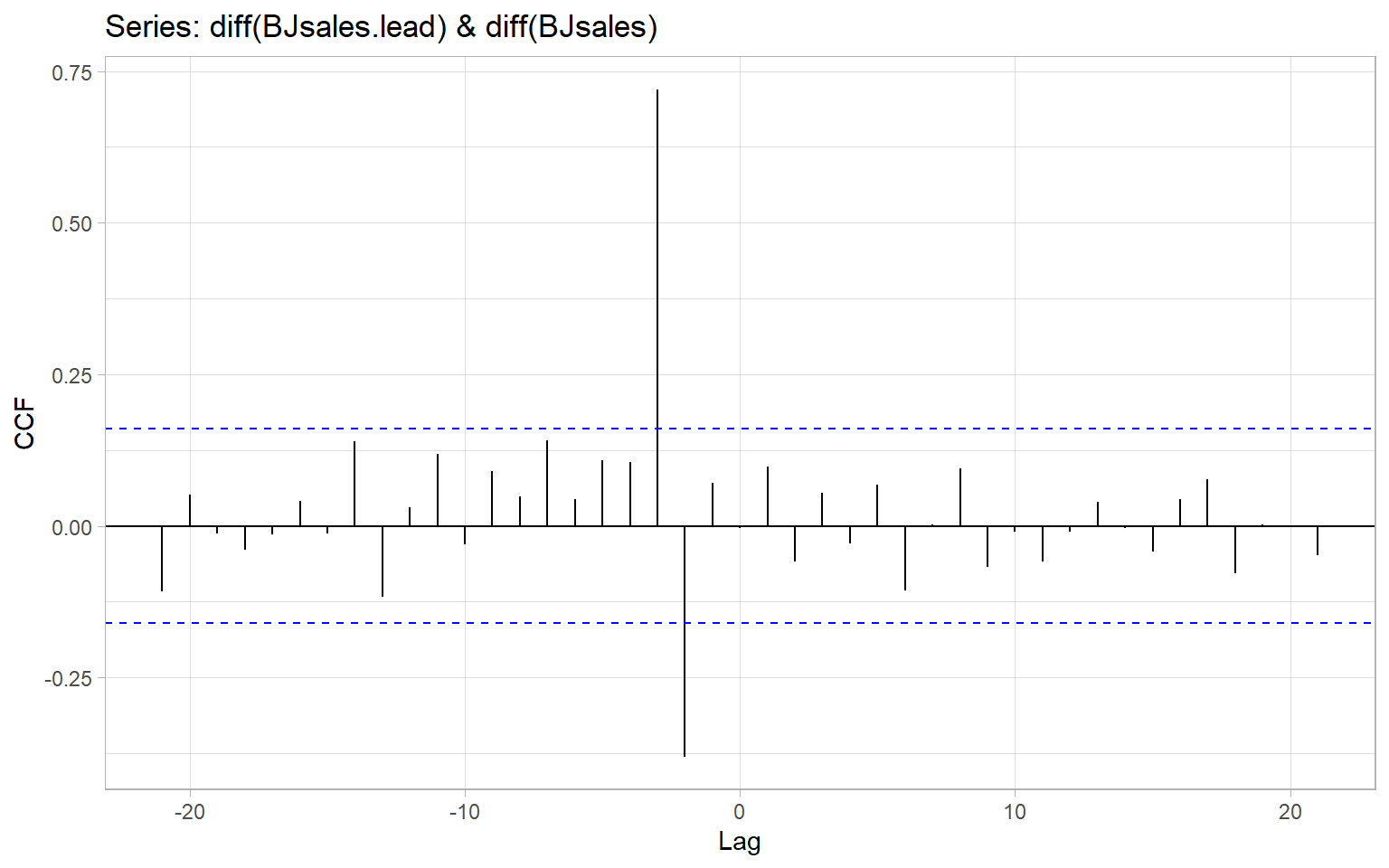

The functions ccf() and forecast::ggCcf() estimate the cross-correlation function and plot it as a base-R or a ggplot2 graph, respectively. The functions follow the notation of Equation 9.1; hence, when using ccf(x, y) we are typically interested in identifying negative lags \(k\) that correspond to correlations between past values of the predictor \(X_t\) and current values of the response \(Y_t\).

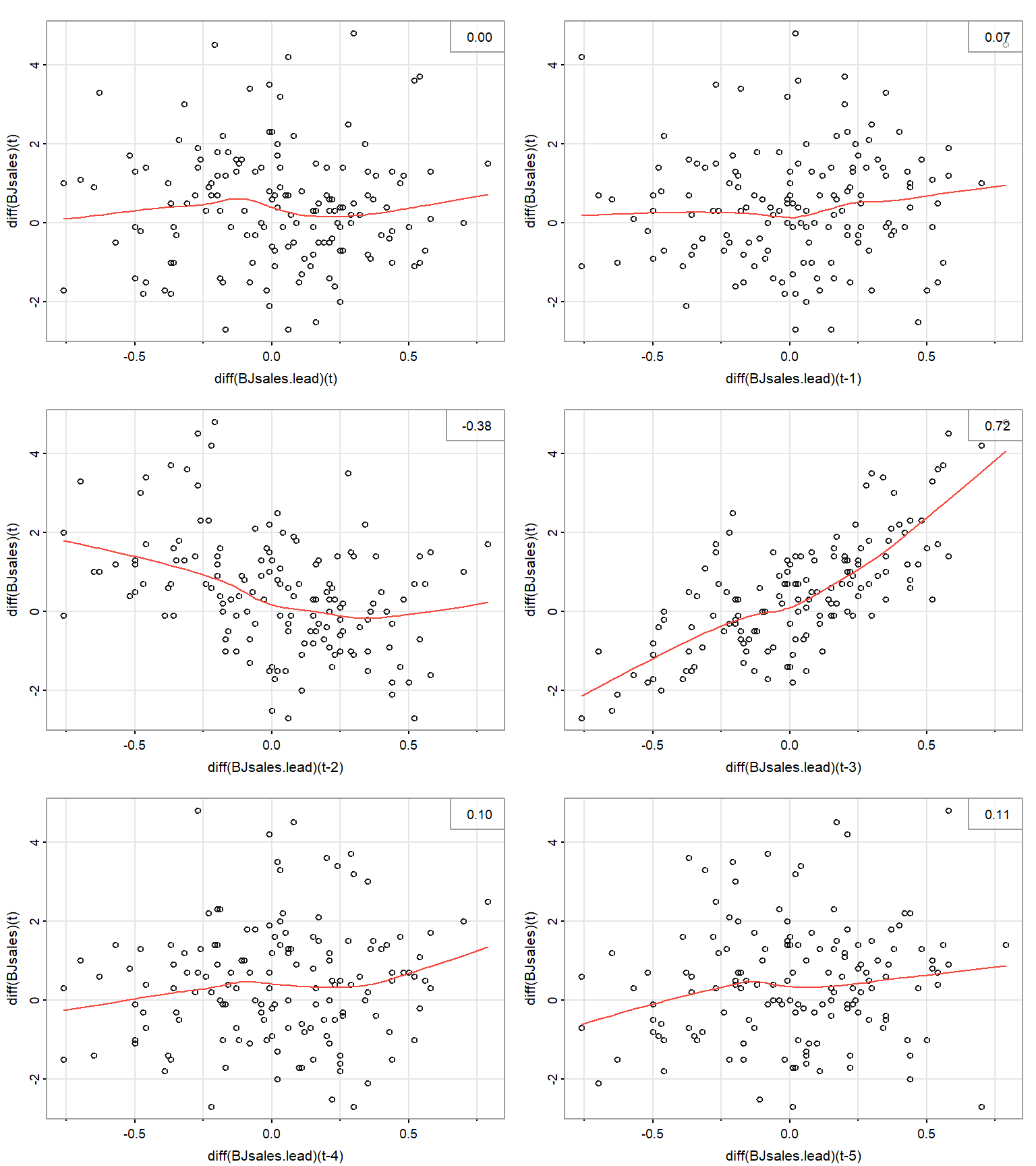

For example, Figure 9.1 shows the CCF for two time series of sales that have been detrended by taking differences. From this plot, lagged values of the first series, \(\Delta \mathrm{BJsales{.}lead}_{t - 2}\) and \(\Delta \mathrm{BJsales{.}lead}_{t - 3}\), are significantly correlated with current values of the second series, \(\Delta \mathrm{BJsales}_{t}\). Hence, we can call BJsales.lead a leading indicator for BJsales.
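The numbers behind such a CCF plot can also be inspected directly. The sketch below uses only base R; the choice of `lag.max = 10` and the object names are our assumptions.

```r
# BJsales and BJsales.lead ship with base R (package datasets)
x <- diff(BJsales.lead)  # detrended leading indicator
y <- diff(BJsales)       # detrended sales

# Estimate cor(x[t + k], y[t]) for k = -10, ..., 10 without plotting
cc <- ccf(x, y, lag.max = 10, plot = FALSE)
ccf_table <- data.frame(lag = as.vector(cc$lag), r = as.vector(cc$acf))

# Approximate 95% band for i.i.d. series, as drawn by plot(cc)
band <- qnorm(0.975) / sqrt(cc$n.used)

# Negative lags outside the band: past x correlated with current y
subset(ccf_table, lag < 0 & abs(r) > band)
```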

The function ccf() assumes that the two time series are evenly spaced and have no missing data. We can use the argument na.action = na.pass to ignore any missing values.

Note that the 95% confidence band shown in Figure 9.1 is based on how i.i.d. series of length \(n\) would correlate (the same way the band is produced for an autocorrelation function with acf()). However, when \(n\) is small, there are not so many pairs \((X_{t+k}, Y_t)\) to calculate the correlations at large lags, hence the confidence bands should be adjusted by using the smaller \(n'(k) = n - |k|\) to account for this lack of confidence.
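A minimal sketch of this adjustment, assuming the usual normal approximation \(\pm z_{0.975}/\sqrt{n}\) for the band and simply replacing \(n\) with \(n'(k)\):

```r
n <- length(diff(BJsales))      # number of paired observations at lag 0
k <- -10:10                     # lags of interest
n_k <- n - abs(k)               # pairs available at each lag, n'(k)

band_fixed    <- qnorm(0.975) / sqrt(n)    # usual band, same for all lags
band_adjusted <- qnorm(0.975) / sqrt(n_k)  # wider band at larger |k|

bands <- data.frame(lag = k, pairs = n_k,
                    fixed = band_fixed, adjusted = band_adjusted)
head(bands, 3)
```

For a series of this length the adjustment is small at short lags; it matters more when \(n\) is small relative to the largest lag considered.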

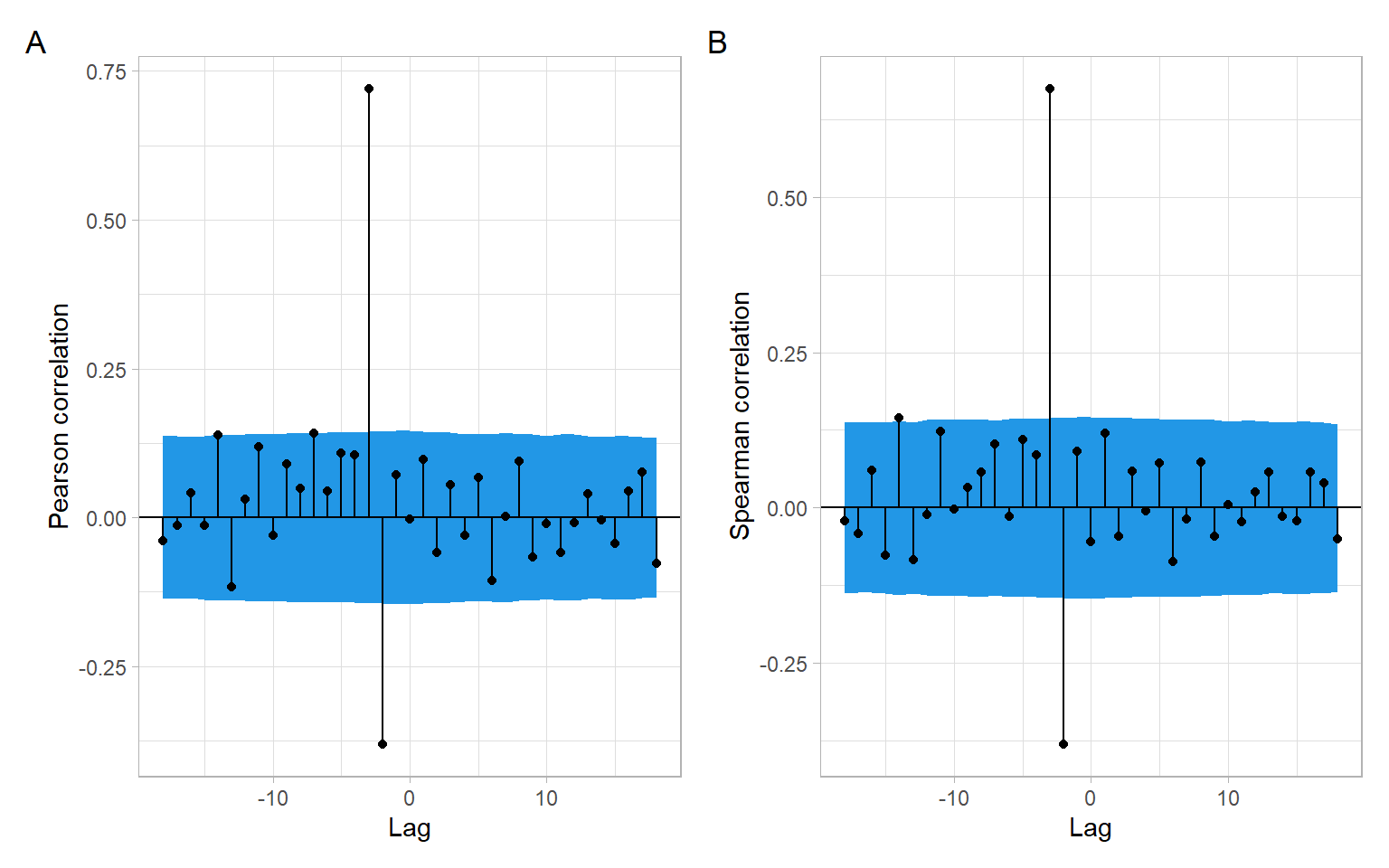

Another concern is the autocorrelation of individual time series that may lead to spurious cross-correlation (Dean and Dunsmuir 2016). Intuitively, say if \(X_t\) and \(Y_t\) are independent from each other but both \(X_t\) and \(Y_t\) are positively autocorrelated – high (or low) values in \(X_t\) follow each other, and high (or low) values in \(Y_t\) follow each other – there could be a time alignment among our considered lags \(k = 0, \pm 1, \pm 2, \dots\) when the ups (or downs) in \(X_{t+k}\) match those in \(Y_t\). This may result in spurious cross-correlation. To address the concern of autocorrelation, we can apply bootstrapping to approximate the distribution of cross-correlation coefficients corresponding not to the i.i.d. series but to stationary time series with the same autocorrelation structure as the input data. The function funtimes::ccf_boot() implements the sieve bootstrap and returns results for both Pearson and Spearman correlations (Figure 9.2).

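    library(dplyr)      # provides the pipe %>%
    library(ggplot2)
    library(patchwork)  # combines plots with + and plot_annotation()

    set.seed(123)
    # Sieve bootstrap of the CCF; the default base-R plot is suppressed
    res <- funtimes::ccf_boot(diff(BJsales.lead), diff(BJsales),
                              B = 10000, plot = "none")

    # Pearson correlations with the bootstrapped confidence band
    p1 <- res %>%
        ggplot(aes(x = Lag, y = r_P, xend = Lag, yend = 0)) +
        geom_ribbon(aes(ymin = lower_P, ymax = upper_P), fill = 4) +
        geom_point() +
        geom_segment() +
        geom_hline(yintercept = 0) +
        ylab("Pearson correlation")

    # Spearman correlations with the bootstrapped confidence band
    p2 <- res %>%
        ggplot(aes(x = Lag, y = r_S, xend = Lag, yend = 0)) +
        geom_ribbon(aes(ymin = lower_S, ymax = upper_S), fill = 4) +
        geom_point() +
        geom_segment() +
        geom_hline(yintercept = 0) +
        ylab("Spearman correlation")

    # Panels A and B side by side
    p1 + p2 +
        plot_annotation(tag_levels = 'A')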

funtimes::ccf_boot() automatically produces a base-R plot that was suppressed here to save the results as the object res and use the package ggplot2 for the plots.
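The concern about spurious cross-correlation that motivates the bootstrap can be illustrated with a small simulation. This is a sketch under assumed settings (two independent AR(1) series with coefficient 0.8, length 100, 500 replications), not a reproduction of any analysis in this lecture.

```r
set.seed(42)
n <- 100        # series length (assumed)
phi <- 0.8      # AR(1) coefficient of both series (assumed)
n_sim <- 500    # number of simulated pairs
band <- qnorm(0.975) / sqrt(n)

# For each pair of independent AR(1) series, check whether the lag-0
# cross-correlation falls outside the i.i.d. band
outside <- replicate(n_sim, {
  x <- arima.sim(list(ar = phi), n = n)
  y <- arima.sim(list(ar = phi), n = n)
  abs(cor(x, y)) > band
})

# Share of false alarms; about 0.05 would be expected for i.i.d. series
mean(outside)
```

Although the series are independent by construction, the share of correlations outside the i.i.d. band is well above the nominal 5%, which is why a band that respects the autocorrelation structure is preferable.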

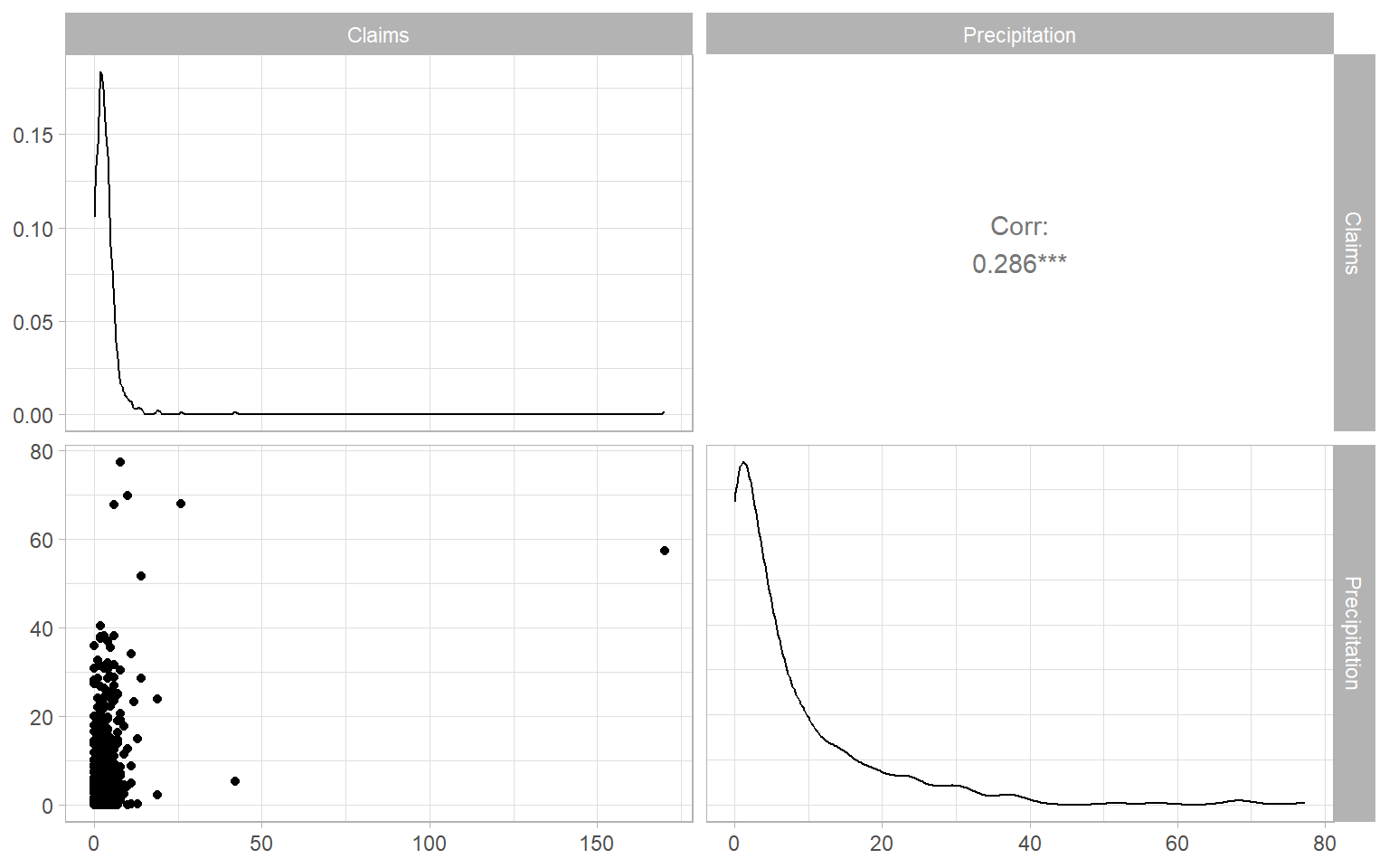

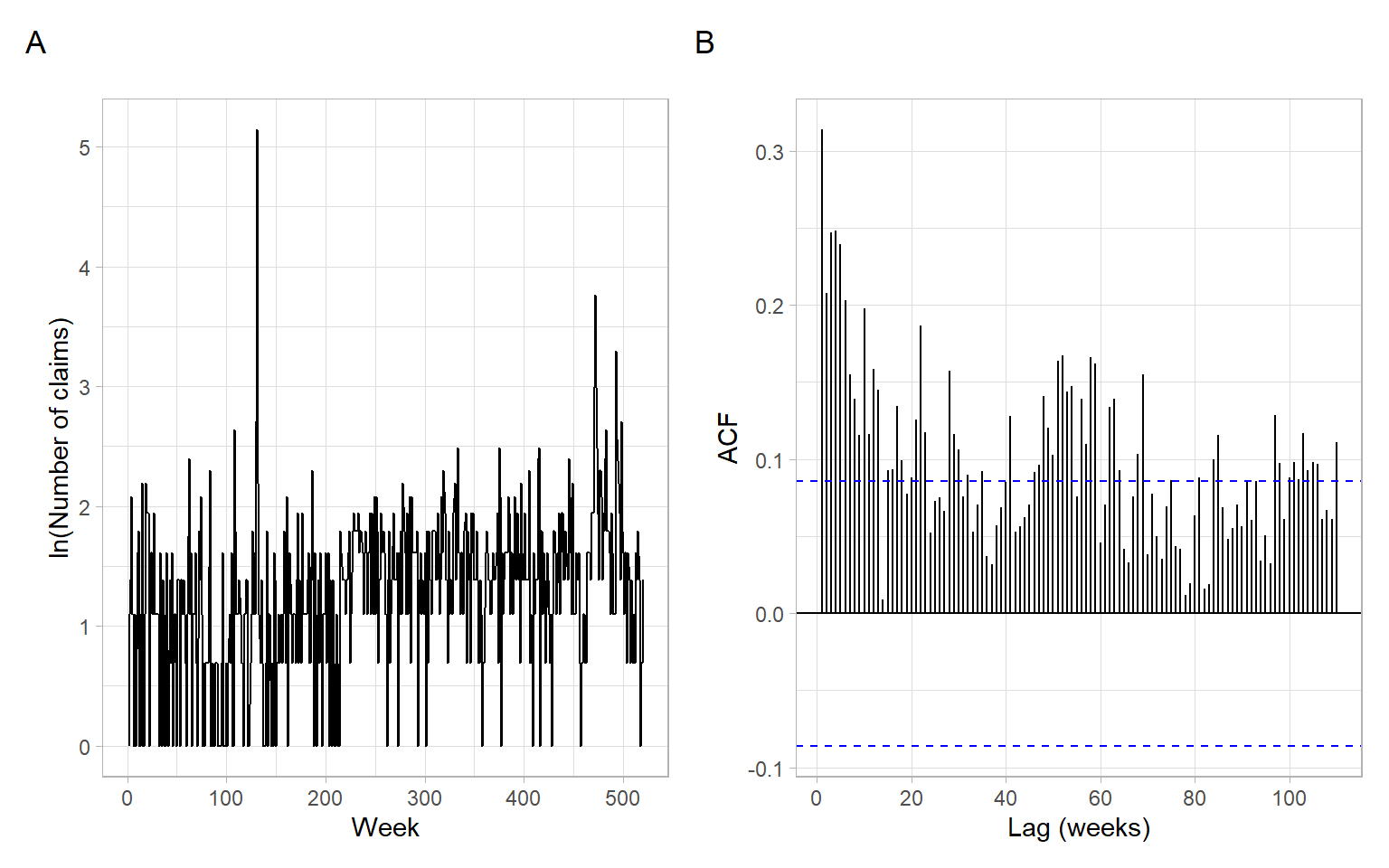

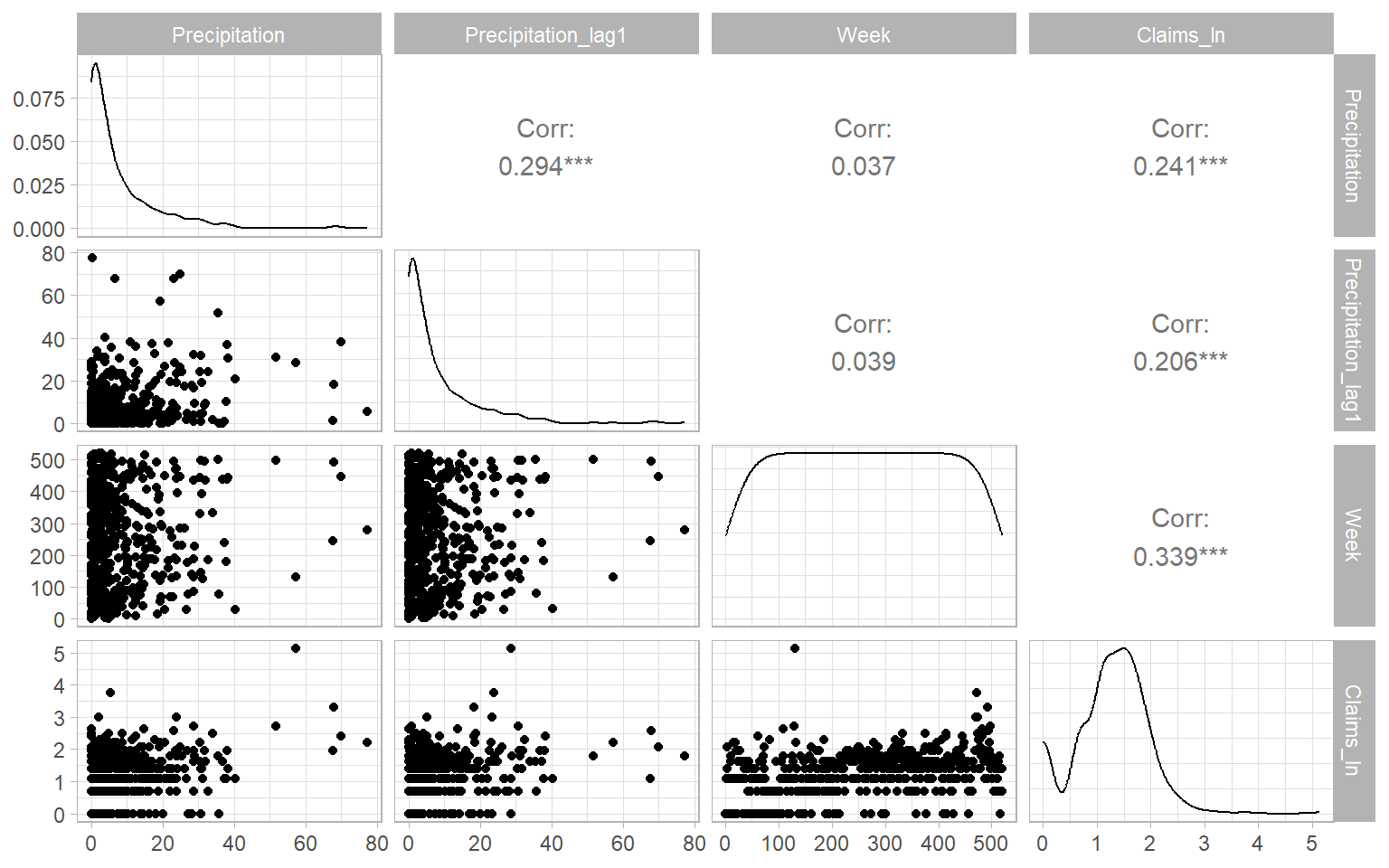

Finally, remember that the reported Pearson correlations in Figure 9.1 and Figure 9.2 A measure the direction and strength of linear relationships, while Spearman correlations in Figure 9.2 B correspond to monotonic relationships. To further investigate whether the lagged relationships are linear, monotonic, or of some other form, we need to plot the lagged scatterplots (Figure 9.3). Also see other functions in the package astsa, such as the function astsa::lag1.plot() for exploring nonlinear autocorrelations.

9.3 Linear regression with ARMA errors

We begin with the simplest case, a constant mean model, where the residuals are serially correlated and follow an AR(1) model, that is \[ Y_{t} = \beta_{0} + \epsilon_{t}, \tag{9.2}\] where \(\epsilon_{t} \sim\) AR(1), i.e., \[ \epsilon_{t} = \phi \epsilon_{t - 1} + a_{t}. \tag{9.3}\]

Here \(\phi\) is a real number satisfying \(0 < |\phi| < 1\); \(a_{t}\) is white noise with zero mean and the variance \(\nu^{2}\), i.e., \(a_{t} \sim \mathrm{WN}(0,\nu^2)\). We also assume that \(\mathrm{cov}(a_{t}, \epsilon_{s}) = 0\) for all \(s < t\) (i.e., that the residuals \(\epsilon_s\) are not correlated with the future white noise \(a_t\)).

Equation 9.3 allows us to express Equation 9.2 as \[ Y_{t} = \beta_{0} + \phi \epsilon_{t - 1} + a_{t}. \tag{9.4}\]

The advantage of this representation is that the new residuals \(a_{t}\) satisfy the OLS assumptions. In particular, since \(a_{t}\) is white noise, \(a_{t}\) is homoskedastic and uncorrelated.

We shall also assume that \(a_{t}\) are normally distributed (for justification of the construction of confidence intervals and prediction intervals). However, even if \(a_{t}\) are not normal, \(a_{t}\) are uncorrelated, which is a big improvement over the serially correlated \(\epsilon_{t}\).

Our goal is to remove the \(\epsilon_{t}\) entirely from the constant mean model and replace them with \(a_{t}\) acting as new residuals. This can be done as follows. First, write Equation 9.2 for \(t-1\) and multiply both sides by \(\phi\), \[ \phi Y_{t -1} = \phi \beta_{0} + \phi \epsilon_{t - 1}. \tag{9.5}\]

Taking the difference (Equation 9.4 minus Equation 9.5) eliminates \(\epsilon_{t-1}\), and hence the old residuals, from the model: \[ \begin{split} Y_{t} - \phi Y_{t - 1} &= \left( \beta_{0} + \phi \epsilon_{t - 1} + a_{t} \right) - \left( \phi \beta_{0} + \phi \epsilon_{t - 1} \right) \\ &= (1 - \phi) \beta_{0} + a_{t}. \end{split} \]

Therefore we can rewrite the constant mean model in Equation 9.2 as \[ Y_{t} = (1 - \phi) \beta_{0} + \phi Y_{t - 1} + a_{t}. \tag{9.6}\]
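The algebra leading to Equation 9.6 can be checked numerically. In the sketch below, the values \(\beta_0 = 10\) and \(\phi = 0.6\) are assumptions chosen for illustration, and the regression of \(Y_t\) on \(Y_{t-1}\) serves only as a check of the rewritten form, not as a recommended estimation method.

```r
set.seed(1)
n <- 5000
beta0 <- 10   # constant mean (assumed)
phi <- 0.6    # AR(1) coefficient (assumed)

# Equation 9.2 with AR(1) errors from Equation 9.3
eps <- arima.sim(list(ar = phi), n = n)
Y <- beta0 + as.numeric(eps)

# Equation 9.6: regress Y_t on Y_{t-1}
fit <- lm(Y[-1] ~ Y[-n])
b <- unname(coef(fit))

c(intercept = b[1],                  # estimates (1 - phi) * beta0 = 4
  slope = b[2],                      # estimates phi = 0.6
  beta0_implied = b[1] / (1 - b[2])) # recovers beta0
```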

In general, for any multiple linear regression \[ Y_{t} = \beta_{0} + \sum^{k}_{j =1} \beta_{j} X_{t,j} + \epsilon_{t}, ~~ \text{where} ~~ \epsilon_{t} \sim \mbox{AR(1)}, \tag{9.7}\] we can perform a similar procedure of eliminating \(\epsilon_{t}\).

This elimination procedure leads to the alternate expression \[ Y_{t} = (1 - \phi) \beta_{0} + \phi Y_{t -1} + \sum^{k}_{j = 1} \beta_{j} (X_{t,j} - \phi X_{t-1, j}) + a_{t}, \tag{9.8}\] where \(a_{t}\) is white noise, i.e., homoskedastic with constant (zero) mean and uncorrelated. See Appendix B describing the method of generalized least squares and an example of \(k=1\).

Note that rewriting the model in this way pulls the autocorrelation parameter for the old residuals, \(\phi\), into the regression part of the model. Thus there are now \(k + 2\) unknown regression parameters (\(\beta_{0}, \beta_{1}, \dots, \beta_{k}\), and \(\phi\)). The introduction of an additional parameter into the regression part of the model can be regarded as the price to be paid for extracting new residuals \(a_{t}\) that satisfy the OLS assumptions.

Note that the new residuals \(a_{t}\) have smaller variance than the \(\epsilon_{t}\). In fact, \[ \begin{split} \sigma^{2} & = \mbox{var} (\epsilon_{t} ) = \mbox{var} (\phi \epsilon_{t - 1} + a_{t}) \\ \\ & = \phi^{2} \mbox{var}(\epsilon_{t - 1}) + \mbox{var} (a_{t} ) ~~~ \mbox{since} ~~ \mbox{cov}(a_{t}, \epsilon_{t - 1}) = 0\\ \\ & = \phi^{2}\sigma^{2} + \nu^{2}, \end{split} \] leading to the relation \[ \nu^{2} = \sigma^{2} (1 - \phi^{2} ). \tag{9.9}\]
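Equation 9.9 can also be verified by simulation. The sketch assumes \(\phi = 0.6\) and \(\nu^{2} = 4\), so that \(\sigma^{2} = 4 / (1 - 0.36) = 6.25\).

```r
set.seed(2)
phi <- 0.6
nu2 <- 4       # variance of the white noise a_t (assumed)

# AR(1) residuals driven by white noise with variance nu2
eps <- as.numeric(arima.sim(list(ar = phi), n = 20000, sd = sqrt(nu2)))

sigma2_hat <- var(eps)                # sample variance of the old residuals
sigma2_theory <- nu2 / (1 - phi^2)    # Equation 9.9 solved for sigma^2

c(sample = sigma2_hat, theory = sigma2_theory)
```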

However, comparing Equation 9.6 with Equation 9.2, and Equation 9.8 with Equation 9.7, we see that the rewritten form of the model is not linear in terms of the parameters \(\beta_{0}, \beta_{1}, \dots, \beta_{k}\) and \(\phi\). For example, the intercept term \((1 - \phi) \beta_{0}\) involves a product of two of the parameters. This nonlinearity makes OLS, implemented in the R functions lm() and lsfit(), a poor method for obtaining parameter estimates. Instead, we will use the method of maximum likelihood (ML) carried out through such R functions as arima().

The function arima() allows us to input the model in its original form, as in Equation 9.7. It then internally rewrites the model to put it in the form of Equation 9.8. (So we do not have to rewrite the model!) It then makes the assumption that the \(a_t\) are normal and constructs the multivariate normal likelihood function \[ L (Y_{1} , \dots , Y_{n}; Q ), \] where \(n\) is the sample size and \(Q\) is the vector of all unknown parameters. In general, for a regression with \(k\) original predictors and AR(1) errors, we have \(Q = (\phi, \beta_{0}, \beta_{1}, \dots, \beta_{k}, \nu^{2})\). Recall that \(\nu^{2} = \mathrm{var}(a_{t}) = \mathrm{cov}(a_{t} , a_{t})\).

The function arima() then uses the historical data \(Y_{1}, \dots, Y_{n}\) to find the parameter estimates \[ \hat{Q} = \left( \hat{\phi}, \hat{\beta}_{0} , \hat{\beta}_{1} , \dots, \hat{\beta}_{k} , \hat{\nu}^2 \right), \] which maximize the likelihood \(L\). These estimates (and other things, such as the standard errors of the estimates) can be saved to an output object in R. We will use an example to illustrate the use and interpretation of the function arima().
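As a minimal sketch of this workflow, the code below simulates data from Equation 9.7 with \(k = 1\) and fits the model with arima(). The true values (\(\beta_0 = 2\), \(\beta_1 = 1.5\), \(\phi = 0.7\), \(\nu^2 = 1\)) and the variable names are assumptions made for illustration.

```r
set.seed(3)
n <- 1000
x <- rnorm(n)                                        # a single predictor
eps <- as.numeric(arima.sim(list(ar = 0.7), n = n))  # AR(1) errors
y <- 2 + 1.5 * x + eps                               # Equation 9.7, k = 1

# Regression with AR(1) errors, estimated by maximum likelihood
fit <- arima(y, order = c(1, 0, 0), xreg = cbind(x = x), method = "ML")

coef(fit)                 # ar1 (phi), intercept (beta_0), x (beta_1)
sqrt(diag(vcov(fit)))     # standard errors of the estimates
fit$sigma2                # estimate of nu^2, the variance of a_t
```

Note that the reported `intercept` corresponds to \(\beta_0\) in the original form of the model, not to the product \((1 - \phi)\beta_0\) from the rewritten form.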

Moreover, we can extend the regression model in Equation 9.7 with AR(1) errors to a model with a more general form of errors, ARMA, by assuming \(\epsilon_t \sim \text{ARMA}(p,q)\) (see Chapter 6.6 in Brockwell and Davis 2002 and https://robjhyndman.com/hyndsight/arimax/): \[ \begin{split} Y_t &= \sum^{k}_{j =1} \beta_{j} X_{t,j} + \epsilon_{t},\\ \epsilon_{t} &= \phi_1 \epsilon_{t-1} + \dots + \phi_p \epsilon_{t-p} + \theta_1 a_{t-1} + \dots + \theta_q a_{t-q} + a_t. \end{split} \tag{9.10}\] The model in Equation 9.10 can be specified in the R functions arima(), fable::ARIMA(), forecast::Arima(), and forecast::auto.arima().

The R package forecast has been superseded by the new package fable that uses an alternate parameterization of constants, see ?fable::ARIMA.

Remember that the variables \(Y_t\) and \(X_{t,j}\) (\(j = 1,\dots,k\)) should be detrended prior to the analysis (to avoid spurious regression results, see the previous lecture on dealing with trends in regression). If differencing is chosen as the method of detrending, the orders of differences \(d\) and \(D\) can be specified directly within the mentioned ARIMA functions (so R will do the differencing for us).
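For instance, differencing can be requested through the `order` argument of arima(). In the sketch below, the lag of 3 for the leading indicator and the MA(1) error structure are assumptions made for illustration, not a selected final model.

```r
# Sales at time t and the leading indicator three steps earlier
n <- length(BJsales)
sales <- as.numeric(BJsales)[4:n]
lead3 <- as.numeric(BJsales.lead)[1:(n - 3)]

# d = 1: arima() differences both the response and the regressor,
# then fits a regression with MA(1) errors to the differenced series
fit <- arima(sales, order = c(0, 1, 1), xreg = cbind(lead3 = lead3))
fit

# Check whether the new residuals look uncorrelated
Box.test(residuals(fit), lag = 10, type = "Ljung-Box", fitdf = 1)
```

With \(d = 1\), no intercept is estimated, so the output contains only the MA coefficient and the regression coefficient for `lead3`.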

9.4 ARIMAX

ARIMAX (‘X’ stands for ‘external regressor’) models are closely related to Equation 9.10, but there is an important difference. For simplicity of notation, we present an ARMAX(\(p,q\)) model, which is a regular ARMA(\(p,q\)) model for \(Y_t\) plus the external regressors: \[ \begin{split} Y_t &= \phi_1 Y_{t-1} + \dots + \phi_p Y_{t-p} + \theta_1 a_{t-1} + \dots + \theta_q a_{t-q} + a_t\\ &+\sum^{k}_{j =1} \beta_{j} X_{t,j}, \end{split} \tag{9.11}\] where \(a_t\) is still a zero-mean white noise process. Interestingly, at this time there is no convenient way to estimate this model in R. One could manually create lagged values of \(Y_t\) as external regressors (i.e., create new variables in R for \(Y_{t-1},\dots, Y_{t-p}\)) and use these variables in the R functions mentioned above while forcing \(p=0\) (e.g., Soliman et al. 2019 used the functions from the R package forecast).
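The manual approach can be sketched with base R as follows. The data are simulated from an ARMAX(1,0) model with assumed coefficients (\(\phi_1 = 0.5\), \(\beta_1 = 2\)); the names `y_lag1` and `xreg` are ours.

```r
set.seed(4)
n <- 1000
x <- rnorm(n)
y <- numeric(n)
# ARMAX(1, 0) data: Y_t = 0.5 * Y_{t-1} + 2 * X_t + a_t (assumed values)
for (t in 2:n) y[t] <- 0.5 * y[t - 1] + 2 * x[t] + rnorm(1)

# Lagged response supplied as an external regressor, with p = 0
xreg <- cbind(y_lag1 = y[-n], x = x[-1])
fit <- arima(y[-1], order = c(0, 0, 0), xreg = xreg, method = "ML")
coef(fit)   # intercept (true 0), y_lag1 (true 0.5), x (true 2)
```

The first observation is dropped because its lagged value is not available.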

The difference between Equation 9.10 and Equation 9.11 is the presence of lagged values of the response variable, \(Y_t\), in Equation 9.11. As Hyndman points out, the regression coefficients \(\beta_j\) in Equation 9.11 lose their interpretability compared with usual regression: they do not show the effect on \(Y_t\) when \(X_{t,j}\) increases by one. Instead, the \(\beta\)’s in the ARMAX model in Equation 9.11 are interpreted conditional on the previous values of the response variable (https://robjhyndman.com/hyndsight/arimax/). Therefore, the model formulation in Equation 9.10 may be preferred.

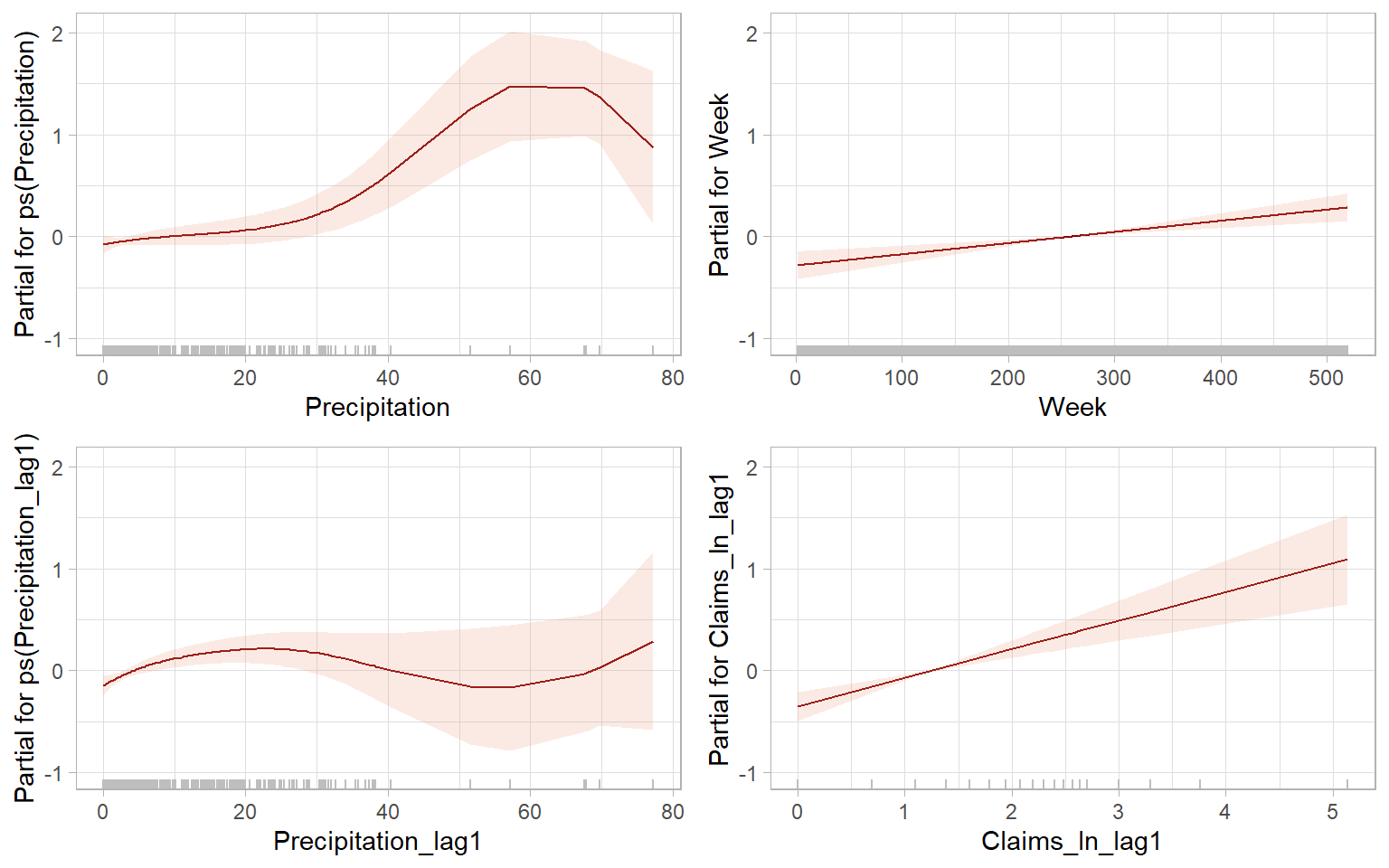

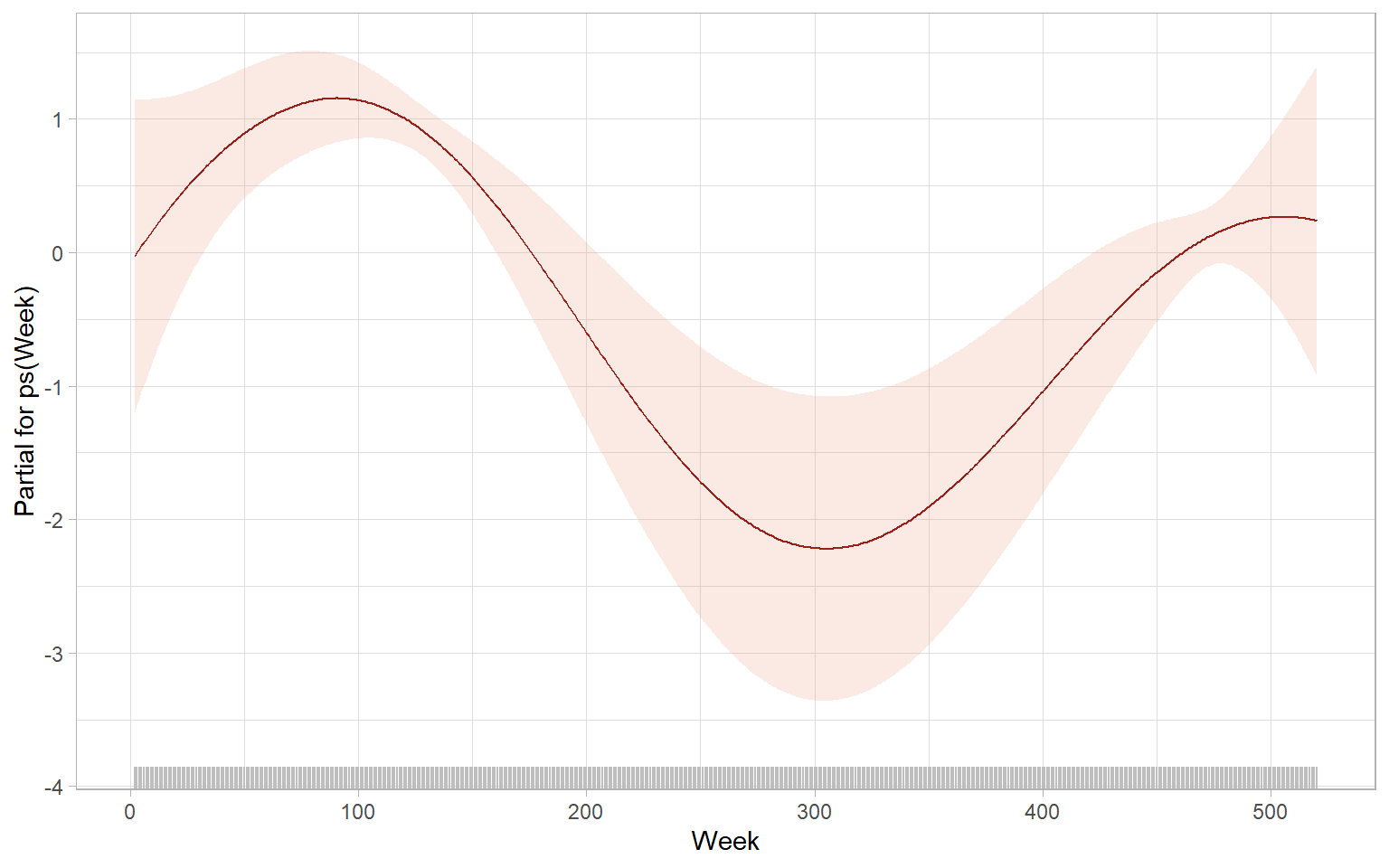

Other applicable models include mixed-effects models, such as those used for repeated measures ANOVA (e.g., implemented in R using nlme::gls() and nlme::lme(), see the different correlation structures; without a grouping factor, the results of nlme::lme(..., correlation = corARMA(...)) should be similar to estimating the model in Equation 9.10). Other regression functions often borrow the functionality (and syntax) of the package nlme for estimating random effects, so autocorrelated residuals can be incorporated into a generalized additive model (GAM) using mgcv::gamm(), or into a generalized additive model for location, scale, and shape (GAMLSS) using gamlss::gamlss(); the latter can also be used just as a GAM if the scale and shape parameters are not modeled. A slightly different solution is possible using a generalized autoregressive moving average model (GARMA) demonstrated in Appendix D, see gamlss.util::garmaFit().
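As a hedged illustration of the nlme route, the sketch below fits a regression with AR(1) errors using nlme::gls() and compares it with arima() on simulated data. The data-generating values and object names are assumptions; the rows are assumed to be in time order and evenly spaced.

```r
library(nlme)  # recommended package distributed with R

set.seed(5)
n <- 500
x <- rnorm(n)
eps <- as.numeric(arima.sim(list(ar = 0.7), n = n))
d <- data.frame(y = 2 + 1.5 * x + eps, x = x)

# Generalized least squares with an AR(1) correlation structure
fit_gls <- gls(y ~ x, data = d, correlation = corAR1(form = ~ 1),
               method = "ML")

# The same model via arima() for comparison
fit_arima <- arima(d$y, order = c(1, 0, 0), xreg = cbind(x = d$x),
                   method = "ML")

# Estimated AR(1) coefficient on the original (constrained) scale
phi_gls <- coef(fit_gls$modelStruct$corStruct, unconstrained = FALSE)
rbind(gls = c(coef(fit_gls), phi = unname(phi_gls)),
      arima = coef(fit_arima)[c("intercept", "x", "ar1")])
```

Both functions maximize a Gaussian likelihood for the same model here, so the estimates should be close; small differences can arise from the optimizers.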

9.5 GAMLSS

Stasinopoulos and Rigby (2007) provide an extension of a generalized additive model to \(k=1,2,3,4\) parameters \(\boldsymbol{\theta}_k\) of a distribution in a so-called generalized additive model for location scale and shape (GAMLSS). The \(k\) parameters represent the location parameter \(\mu\) (it is what we typically model with regression models), scale \(\sigma\), and two shape parameters: skewness and kurtosis. The model can be further generalized for \(k>4\) if needed. It allows us to fit \(k\) individual models to study relationships between regressors \(\boldsymbol{x}\) and parameters of the response distribution: \[ g_k(\boldsymbol{\theta}_k) = h_k\left(\boldsymbol{X}_k,\boldsymbol{\beta}_k\right) + \sum_{j=1}^{J_k}h_{jk}(\boldsymbol{x}_{jk}), \tag{9.12}\] where \(k=1\) produces a model for the mean; \(h_k(\cdot)\) and \(h_{jk}(\cdot)\) are nonlinear functions; \(\boldsymbol{\beta}_k\) is a parameter vector of length \(J_k\); \(\boldsymbol{X}_k\) is an \(n\times J_k\) design matrix; \(\boldsymbol{x}_{jk}\) are vectors of length \(n\). The terms in the GAMLSS provide a flexible framework to specify nonlinearities, random effects, and correlation structure as in the mixed effects models (Zuur et al. 2009); see Table 3 by Stasinopoulos and Rigby (2007) for the possible specifications of the additive terms. Hence, a model as in Equation 9.12 may accommodate non-normal distributions, possibly nonlinear relationships, and spatiotemporal dependencies in the data.

9.6 Granger causality

The concept of Granger causality (Granger 1969; Kirchgässner and Wolters 2007) is based on predictability, which is why we consider it in a time series course. In contrast, Pearl causality is based on the analysis of interventions (Rebane and Pearl 1987; Pearl 2009).

Let \(I_t\) be the total information set available at the time \(t\). This information set includes the two time series \(X\) and \(Y\). Let \(\bar{X}_t\) be the set of all current and past values of \(X\), i.e., \(\bar{X}_t = \{X_{t}, X_{t-1}, \dots, X_{t-k}, \dots \}\) and analogously of \(Y\). Let \(\sigma^2(\cdot)\) be the variance of the corresponding forecast error.

The difference of two sets, \(A\) and \(B\), is denoted by \(A \setminus B\); sometimes the minus sign is used instead, \(A - B\).

Granger causality

\(X\) is (simply) Granger causal to \(Y\) if future values of \(Y\) can be predicted better, i.e., with a smaller forecast error variance, if current and past values of \(X\) are used: \[ \sigma^2(Y_{t+1}|I_t) < \sigma^2(Y_{t+1}|I_t \setminus \bar{X}_t). \tag{9.15}\]

Instantaneous Granger causality

\(X\) is instantaneously Granger causal to \(Y\) if the future value of \(Y\), \(Y_{t+1}\), can be predicted better, i.e., with a smaller forecast error variance, if the future value of \(X\), \(X_{t+1}\), is used in addition to the current and past values of \(X\): \[ \sigma^2(Y_{t+1}|\{I_t, X_{t+1}\}) < \sigma^2(Y_{t+1}|I_t ). \tag{9.16}\]

Feedback

There is feedback between \(X\) and \(Y\) if \(X\) is causal to \(Y\) and \(Y\) is causal to \(X\). Feedback is only defined for the case of simple causal relations.

The test for Equation 9.15 and Equation 9.16 is, essentially, an \(F\)-test comparing two nested models: with additional predictors \(X\) and without. In other words, consider the model: \[ Y_t = \beta_0 + \sum_{k=1}^{k_1}\beta_k Y_{t-k} + \sum_{k=k_0}^{k_2}\alpha_k X_{t-k} + U_t \tag{9.17}\] with \(k_0 = 1\). An \(F\)-test is applied to test the null hypothesis, H\(_0\): \(\alpha_1 = \alpha_2 = \dots = \alpha_{k_2} = 0\). By switching \(X\) and \(Y\) in Equation 9.17, it can be tested whether a simple causal relation from \(Y\) to \(X\) exists. There is a feedback relation if the null hypothesis is rejected in both directions (\(X\rightarrow Y\) and \(Y\rightarrow X\)). To test whether there is an instantaneous causality, we finally set \(k_0 = 0\) and perform a \(t\) or \(F\)-test for the null hypothesis H\(_0\): \(\alpha_0 = 0\).
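The nested-model comparison can be sketched with lm() and anova(). The data below are simulated so that past values of \(X\) help to predict \(Y\); the coefficients, the sample size, and the choice \(k_1 = k_2 = 1\) are assumptions made for illustration.

```r
set.seed(6)
n <- 200
x <- as.numeric(arima.sim(list(ar = 0.5), n = n))
y <- numeric(n)
# Simulated data in which past X helps to predict Y (assumed structure)
for (t in 2:n) y[t] <- 0.5 * y[t - 1] + 0.8 * x[t - 1] + rnorm(1)

# Data frame with current values and first lags (k_1 = k_2 = 1)
d <- data.frame(y = y[-1], x = x[-1], y_lag1 = y[-n], x_lag1 = x[-n])

# H0: X does not Granger-cause Y (alpha_1 = 0 in Equation 9.17)
m_restricted   <- lm(y ~ y_lag1, data = d)
m_unrestricted <- lm(y ~ y_lag1 + x_lag1, data = d)
test_xy <- anova(m_restricted, m_unrestricted)

# Reverse direction, H0: Y does not Granger-cause X
test_yx <- anova(lm(x ~ x_lag1, data = d),
                 lm(x ~ x_lag1 + y_lag1, data = d))

c(p_x_to_y = test_xy$`Pr(>F)`[2], p_y_to_x = test_yx$`Pr(>F)`[2])
```

A small p-value in the first test and a large one in the second would point to a simple causal relation from \(X\) to \(Y\) without feedback.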

The problem with this test is that the results are strongly dependent on the number of lags of the explanatory variable, \(k_2\). There is a trade-off: the more lagged values we include, the better the influence of this variable can be captured. This argues for a high maximal lag. On the other hand, the power of this test is lower the more lagged values are included (Chapter 3 of Kirchgässner and Wolters 2007). Two general procedures can be used to select the lags: inspecting the sensitivity of results to different \(k_2\) (sensitivity analysis) or one of the different information criteria guiding model selection.
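Both procedures can be combined in a short loop. The sketch below reuses a simulated structure (assumed coefficients, true lag of 1) and, as a simplification, sets \(k_1 = k_2 = k\) in every fit; all fits use the same observations so that the AIC values are comparable.

```r
set.seed(7)
n <- 300
x <- as.numeric(arima.sim(list(ar = 0.5), n = n))
y <- numeric(n)
for (t in 2:n) y[t] <- 0.5 * y[t - 1] + 0.8 * x[t - 1] + rnorm(1)

max_lag <- 4
# embed() puts the current value in column 1 and lags 1..max_lag after it
Ye <- embed(y, max_lag + 1)
Xe <- embed(x, max_lag + 1)
y_now <- Ye[, 1]
Y_lags <- Ye[, -1, drop = FALSE]
X_lags <- Xe[, -1, drop = FALSE]

# For each k, fit the restricted and unrestricted models and test
# whether the k lags of X jointly improve the prediction of Y
results <- do.call(rbind, lapply(1:max_lag, function(k) {
  m0 <- lm(y_now ~ Y_lags[, 1:k, drop = FALSE])
  m1 <- lm(y_now ~ Y_lags[, 1:k, drop = FALSE] + X_lags[, 1:k, drop = FALSE])
  data.frame(k = k, AIC = AIC(m1), p_value = anova(m0, m1)$`Pr(>F)`[2])
}))
results
```

If the conclusion of the test changes with \(k\), the result should be reported as sensitive to the lag choice rather than based on a single convenient value.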

The function lmtest::grangertest() uses a single lag order for both \(k_1\) and \(k_2\) in Equation 9.17. In our example, it was \(k_1 = k_2 = 1\). The ‘manual’ test using the function anova() can be used for models with \(k_1 = k_2\) or \(k_1 \neq k_2\).

9.7 Conclusion

Multivariate models are still much more difficult to fit than univariate ones. Multiple regression remains a treacherous procedure when applied to time series data. Many observed time series exhibit nonlinear characteristics, but nonlinear models often fail to give better out-of-sample forecasts than linear models, perhaps because the latter are more robust to departures from model assumptions. It is always a good idea to end with the so-called eyeball test. Plot the forecasts on a time plot of the data and check that they look intuitively reasonable (Chatfield 2000).